Automatic rotoscoping could speed up one of the most labor-intensive parts of a production. It is therefore worth looking at how far research into algorithms that make this possible has come. In this blog post we look at four recent techniques and programs that aim for (semi-)automatic rotoscoping.

Comixify

Comixify is a project born from 5+ years of research at the University of Warsaw, Stanford and Columbia. Initially it was a platform intended to automatically convert a video into a stylized comic. During 2020, however, its creators expanded the platform and added, among other things, AI-driven automatic rotoscoping.

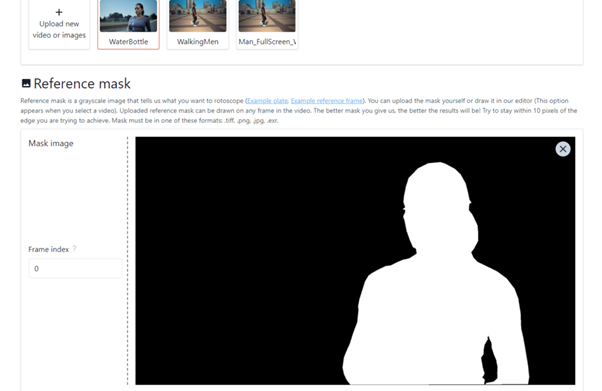

Usage

Comixify works with reference frames that have already been rotoscoped. This means that you have to manually rotoscope a few frames yourself, ideally at key moments in the video where the AI is likely to struggle. After you provide these reference frames, the video is processed automatically, so no further manual input is required or possible.

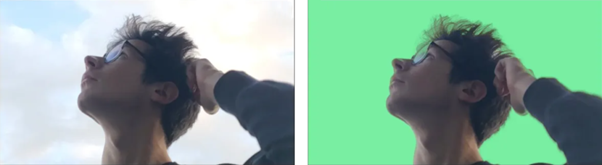

Background Matting V2

Background Matting V2 is an automatic rotoscoping algorithm developed by the University of Washington and published in early 2021. It shows a lot of potential in terms of hair detail and its ability to run in real time (60 FPS at HD / 30 FPS at 4K on an RTX 2080 Ti).

Usage

Using Background Matting V2 is very simple: all you need to provide is your video and a photo of the background of your scene without your subject. Because the algorithm requires a background photo, the types of video it can handle are severely limited. The camera must also stay still; handheld footage with minimal movement can be used, provided this is indicated in the options.

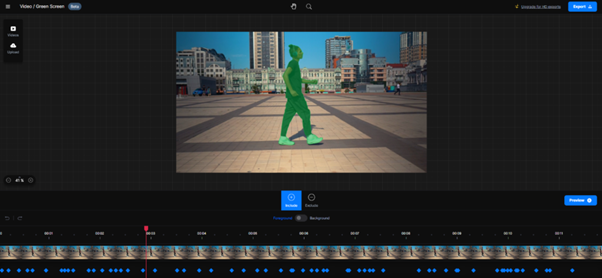
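To give a feel for why a clean background photo and a static camera matter, here is a minimal sketch of a naive difference matte. This is not the Background Matting V2 algorithm, which relies on a neural network. It is only a toy illustration of the underlying idea of comparing each frame against a subject-free background. The function name `difference_matte`, the threshold and the pixel values are all illustrative assumptions.

```python
def difference_matte(frame, background, threshold=30):
    """Mark a pixel as foreground (1) when it differs enough from the background photo.

    `frame` and `background` are equally sized 2D lists of grayscale values (0-255).
    The threshold of 30 is an arbitrary illustrative choice.
    """
    if len(frame) != len(background):
        raise ValueError("frame and background must have the same height")
    mask = []
    for frame_row, background_row in zip(frame, background):
        if len(frame_row) != len(background_row):
            raise ValueError("frame and background must have the same width")
        mask.append([
            1 if abs(pixel - reference) > threshold else 0
            for pixel, reference in zip(frame_row, background_row)
        ])
    return mask


# Static camera: only the subject (the bright pixels) differs from the background photo.
background = [[100, 100, 100, 100],
              [100, 100, 100, 100]]
frame = [[100, 200, 210, 100],
         [102, 190, 205, 98]]
static_mask = difference_matte(frame, background)

# Moving camera: the same empty scene, shifted one pixel to the right.
# There is no subject, yet the shifted edge is flagged as foreground.
edge_background = [[50, 50, 200, 200]]
shifted_frame = [[50, 50, 50, 200]]
moving_mask = difference_matte(shifted_frame, edge_background)

print(static_mask)
print(moving_mask)
```

In the static case only the subject pixels end up in the mask. In the shifted case a pixel is marked as foreground even though there is no subject at all, which is the kind of error a moving camera introduces when the comparison relies on a fixed background photo.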

RunwayML

RunwayML is a program that uses machine learning to automatically detect and track your chosen subject throughout the video. It has certainly made its mark: it has been used by Google, New Balance and Facebook, among others. RunwayML does not require any installation. Everything happens online in your browser, so you don’t need a high-end PC.

Usage

Rotoscoping with RunwayML is very simple and does not require a tutorial to get started. All you have to do is indicate your subject by placing points on the areas that you do or do not want to rotoscope. RunwayML will then automatically predict what it should cut out for the entire video. It will most likely not get everything right the first time, so you can manually adjust each frame by placing points again, after which the video is rotoscoped again with this additional information. RunwayML’s simplicity also has a downside: there aren’t many manual options to perfect your rotoscope, so you cannot always indicate the outline of your subject very accurately.

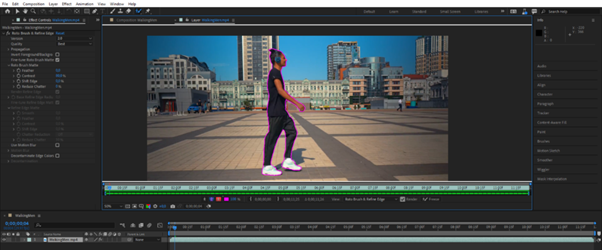

Roto Brush 2

Roto Brush 2 is a tool found in Adobe After Effects. Roto Brush 2 also uses AI to follow the edges of your subject. It can be compared to RunwayML but with more options for manual input.

Usage

Just as with RunwayML, you have to indicate your subject. You do this by moving a brush over it, just as with Photoshop’s “Quick Selection Tool”. After Effects will then try to rotoscope the entire video, and again you can make improvements where necessary. A major advantage of Roto Brush 2 is the range of settings that can be used to fine-tune your rotoscope. You have options such as “Feather”, “Shift Edge” and “Reduce Chatter”, as well as a refinement tool that you can use to better capture details such as hair.

Testing

To obtain a complete picture of the strengths and weaknesses of each technique, they have been tested on different types of fragments and compared with each other. Almost every fragment contains an aspect that the AI may have difficulty with. The videos are always in HD or 4K. Background Matting V2 has been tested with different videos, because it requires a photo of the background without a subject.

Standard video

We look not only at videos that can be challenging for the AI, but also at fragments that are less difficult to rotoscope. This video shows a person walking through a square, followed by a camera. The person is always in the center of the screen.

Semi-transparency

Semi-transparency can make it more difficult for the algorithm to decide whether an object belongs to the foreground or the background, because the pixels of the semi-transparent object are very similar to the pixels of the background.
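The similarity described above follows from the standard compositing equation, in which an observed pixel is `alpha * foreground + (1 - alpha) * background`. The sketch below is only an illustration of that equation, not part of any of the tested tools. The colors and the alpha value of 0.2 are hypothetical.

```python
def composite(alpha, foreground, background):
    """Blend one RGB pixel: alpha * foreground + (1 - alpha) * background."""
    return tuple(alpha * f + (1 - alpha) * b for f, b in zip(foreground, background))


def color_distance(first, second):
    """Euclidean distance between two RGB colors."""
    return sum((a - b) ** 2 for a, b in zip(first, second)) ** 0.5


background = (40, 120, 200)    # hypothetical background color
foreground = (230, 230, 230)   # hypothetical color of the subject

opaque_pixel = composite(1.0, foreground, background)
sheer_pixel = composite(0.2, foreground, background)  # e.g. thin fabric or glass

# How far each observed pixel is from the plain background color.
opaque_contrast = color_distance(opaque_pixel, background)
sheer_contrast = color_distance(sheer_pixel, background)

print(round(opaque_contrast, 1), round(sheer_contrast, 1))
```

With an alpha of 0.2, the observed pixel keeps only a fifth of the contrast that an opaque subject would have against the same background, which leaves the algorithm far less signal to work with.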

In and out of view

In this case, we test whether the algorithm detects when the subject leaves the screen and, more importantly, whether it recognizes the subject when it comes back into view.

Obstruction & movement

Finally, we have a fragment in which both the camera and the subject move. The subject is also obstructed by several pillars throughout the video. This is by far the most challenging example we test the AI on.

RunwayML

The low resolution of the results is due to the free version of RunwayML. RunwayML’s results look solid but still show some irregularities. Even in the simpler video, where a person walks through a square, we see artifacts near the shoes and in the gap between the arm and the body. Even after manual intervention, the algorithm was unable to correct these errors.

RunwayML has no problems with semi-transparency; some manual adjustments were needed, but nothing out of proportion. The slow pace of the video might have helped, but further testing found no correlation between the speed of the video and the accuracy of the rotoscope. The whole process took 17 minutes.

Subjects walking in and out of the scene were detected automatically. But another problem came to light when manually improving the rotoscope in RunwayML: when a frame contains multiple subjects that are separated from each other and you adjust the frame by indicating one of them more precisely, the information about the other subject is deleted, so you have to select it all over again. This is very counterproductive, especially when you have to adjust multiple frames. As a result, this entire process took 55 minutes. An additional problem is that the gaps between the hands are not cut out correctly, most likely because they are too small to detect: all the algorithms had problems with subjects that occupy only a small part of the screen.

Finally, looking at the results of the most complicated video, we notice that RunwayML has difficulty tracking the edges of the subject consistently. The same problem arose again, with multiple regions to be rotoscoped (the body to the left and right of the pillar), so this process also took 55 minutes, and even then the result is not ideal. In the last two clips you can also see some instances where parts of the subject have been cut off incorrectly.

Roto Brush 2

Even though Roto Brush 2 is the most manual technique, the rotoscopes it creates look promising. Starting with the first video, there are some artifacts visible around the shoes. This is due to a combination of trying to track the shoelaces and the moving shadows. Shadows are a problem for both Roto Brush 2 and RunwayML, and this is usually where the most manual intervention is required.

Semi-transparency is no problem either, provided some minor adjustments are made; the whole process took 15 minutes. Roto Brush 2 does not detect when the subject reappears in the scene. This is not a big problem, because once you indicate the subject again it is automatically followed again. Just as with RunwayML, cutting out the areas between the hands proves somewhat more difficult.

Even with the complex video, Roto Brush 2 manages to produce a reasonably accurate rotoscope. The edges are more consistent than those of RunwayML, but some artifacts are still visible. Making this complete rotoscope took 26 minutes.

Comixify

Although Comixify rotoscopes the simple video best of all the techniques, it does the worst on the other fragments. In the first video, the only flaws are again around the shoes. It manages to cut away the gap at the arms, although not completely. When tracking the two subjects that go in and out of the scene, things go completely wrong; it seems to have difficulty tracking two subjects at a time. Finally, the last clip contains an abundance of artifacts. The separation of foreground and background is usually incorrect, and detecting the subject once it has passed the pillar either doesn’t happen or happens too late.

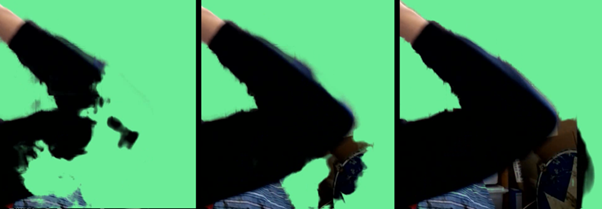

Background Matting V2

Although Background Matting V2 required different videos, because it needs a background photo and an (almost) static camera, we took the same approach. The goal is still to investigate the limitations, such as semi-transparency, obstacles and so on.

Findings

Our findings did not match the results published by the researchers. Most of the time we got remarkably detailed results around the hair, but huge artifacts emerged elsewhere. This was not an isolated case: the same problems arose in all of the dozens of fragments we tried.

Research

Several kinds of video were tested. Resolution does make a difference: 4K produces better results than 720p and HD, but still not accurate enough to meet expectations. Different types of videos also gave the same results: indoors and outdoors, one subject and multiple subjects, etc.

The manual input that Background Matting V2 offers is minimal. There are some parameters that affect the final product, but only one of them has a major influence on the shape of the rotoscope. As seen in the example above, it is very difficult to determine the best value for this parameter. Because of the lack of manual input, you can’t decide where the improvements take place, and a value that is correct for one location can create a completely incorrect image in another part of the video. Tuning this parameter therefore did not lead to an accurate rotoscope either.

Conclusion

We are not yet at the point where automatic programs and techniques can take over the complete rotoscoping process. But the techniques that exist today can certainly lend a helping hand, especially in the first rough phase of a production.

RunwayML

RunwayML is a very user-friendly program for fast and rough rotoscoping. But if you want to go into further detail, the lack of manual options is a major hurdle and you will be more inclined to work with Roto Brush 2. Because of this, RunwayML works best with fragments where the subject is very clearly separated from the background and where no very small details need to be rotoscoped. For videos that contain multiple subjects, it is also best to switch to a different technique. RunwayML can certainly be used productively, provided you use fragments that suit the algorithm.

Roto Brush 2

Roto Brush 2 is the best of the tested techniques for automatically rotoscoping fragments. It tracks your subject well, and the arsenal of manual settings on offer makes it easy to make adjustments yourself. But make no mistake: you do not simply press one button and have everything done for you. You will still have to spend time optimizing the rotoscope it creates. You can look at Roto Brush 2 as a major upgrade from RunwayML: there are more options available, and it can handle more complicated videos.

Comixify

In its current state, Comixify is not yet a worthwhile technology. The accuracy of the rotoscopes it produces is still too inconsistent. And since you have no insight into the process, you can only see at the very end what went wrong and where. There is a future for Comixify, however. We shouldn’t forget that Comixify created the most accurate rotoscope of the first, simple video. Knowing that the creators are busy both improving the algorithm and producing plug-ins for existing software, you should not lose sight of Comixify.

Background Matting V2

Finally, we have Background Matting V2, a technique that looked promising but did not give the expected results. If you need a well-detailed rotoscope of hair, Background Matting V2 can certainly be used, but anything other than the hair will not be cut out correctly. None of the videos we used were accurately rotoscoped, and even searching for the optimal scene with the correct settings brought no success. This means that the technique is not yet suitable for creating automatic rotoscopes, and we will have to wait for it to develop further.

Links

RunwayML: https://runwayml.com/green-screen/

Background Matting V2: https://grail.cs.washington.edu/projects/background-matting-v2/

Comixify: https://comixify.ai/information-vfx