Experiment: Aged-Up Deepfakes

As part of the TETRA research project AI in production, we prepared an introductory workshop on deepfakes for the DAE students of the VFX major. During the annual creative week, all regular courses for first- and second-year students are canceled and replaced by creative sessions. Our goal was to provide an introduction to deepfakes from the research group: what deepfake technology is, what you can do with standard software without heavy programming, what to pay attention to if you want to get started with it, …

Due to the lockdown, the creative week was canceled this academic year, but the preparations for the workshop were already complete. The workshop with students will be moved to a later date in one of the VFX classes, but we are happy to share the approach of the workshop with the guidance group here. Read about the limits of this technology and how we tackled Star Wars in a hands-on use case that combines different tools.

Reinforcement Learning to find bugs

In this overview post we show how Reinforcement Learning (RL) can be applied to testing games. Drawing on several papers and use cases, we show how RL can detect errors in level design as well as game-breaking bugs. It may be helpful to read our introduction to RL first if you are unfamiliar with the concept.

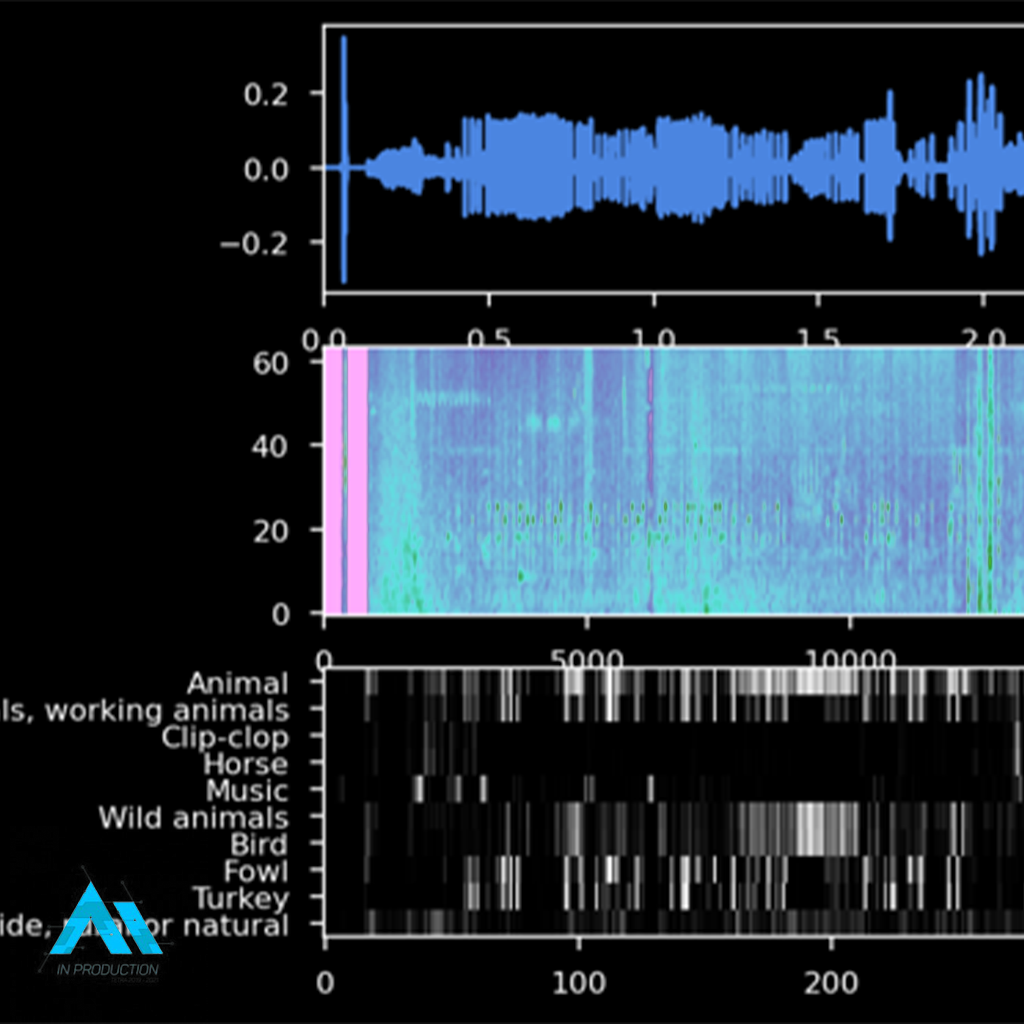

Audio in Games

Audio is essential to the success of any game. It provides an immersive experience that can make the difference between a player enjoying a game and becoming frustrated and giving up.

Delayed, missing, or incorrect sound effects can seriously harm the immersion of a game. Inconsistent or sudden changes in the volume of the background music can break immersion as well.

With this use case we want to investigate what impact AI can have on audio in games, and whether problems such as detecting errors in sound effects and music can be addressed.

Audio problems may or may not bother players while playing, but the right sound effects and background music set the atmosphere of a game. Audio is also an important cue for what is happening in the game at any given moment. Consider enemies sneaking up on you from the side to gain the advantage in an attack: sound is often how you notice them.

Unfortunately, audio issues still exist in games. They can be caused by several factors, including code changes, assets that are not properly optimized, or simply the way the game is played. We refer to these issues as audio bugs.

Actively detecting audio bugs takes considerable time. Furthermore, they are sometimes overlooked because game testers give audio a lower priority. For example, people sometimes test without their headphones or with the volume set to 0, because detecting graphical and functional bugs involves a lot of repetition, which means that sound effects are also played ad nauseam.

In this use case, we explore ways to automatically detect audio bugs during development. This can help you identify and resolve them before they cause problems for players. However, we’ll ignore the more nuanced challenges with audio, as detecting technical aspects such as room temperature is too niche to delve into further.
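To make the idea of automatic detection more concrete, here is a minimal sketch of two simple rule-based checks: one for sudden volume changes and one for a clip that is effectively silent (a possible sign of a missing sound effect). It is not the method used in this use case. The function names, the frame size, and the decibel thresholds are all illustrative assumptions, and the input is assumed to be a list of mono samples in the range -1.0 to 1.0.

```python
import math


def frame_rms(samples, frame_size):
    """Return the RMS level of each full, non-overlapping frame."""
    levels = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        levels.append(math.sqrt(sum(s * s for s in frame) / frame_size))
    return levels


def to_db(level, floor=1e-6):
    """Convert a linear level to decibels, clamping to avoid log(0)."""
    return 20.0 * math.log10(max(level, floor))


def find_volume_jumps(samples, frame_size=1024, max_jump_db=12.0):
    """Return indices of frames whose level differs from the previous
    frame by more than max_jump_db (an assumed, tunable threshold)."""
    levels_db = [to_db(level) for level in frame_rms(samples, frame_size)]
    return [
        i for i in range(1, len(levels_db))
        if abs(levels_db[i] - levels_db[i - 1]) > max_jump_db
    ]


def is_silent(samples, frame_size=1024, silence_db=-60.0):
    """Return True if every frame is below silence_db (assumed threshold).
    A clip shorter than one frame is also treated as silent."""
    levels = frame_rms(samples, frame_size)
    return not levels or all(to_db(level) < silence_db for level in levels)
```

In practice such checks would run on audio captured during automated play sessions, and the thresholds would need tuning per game, since intentional loud events (an explosion, for example) also produce large jumps.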

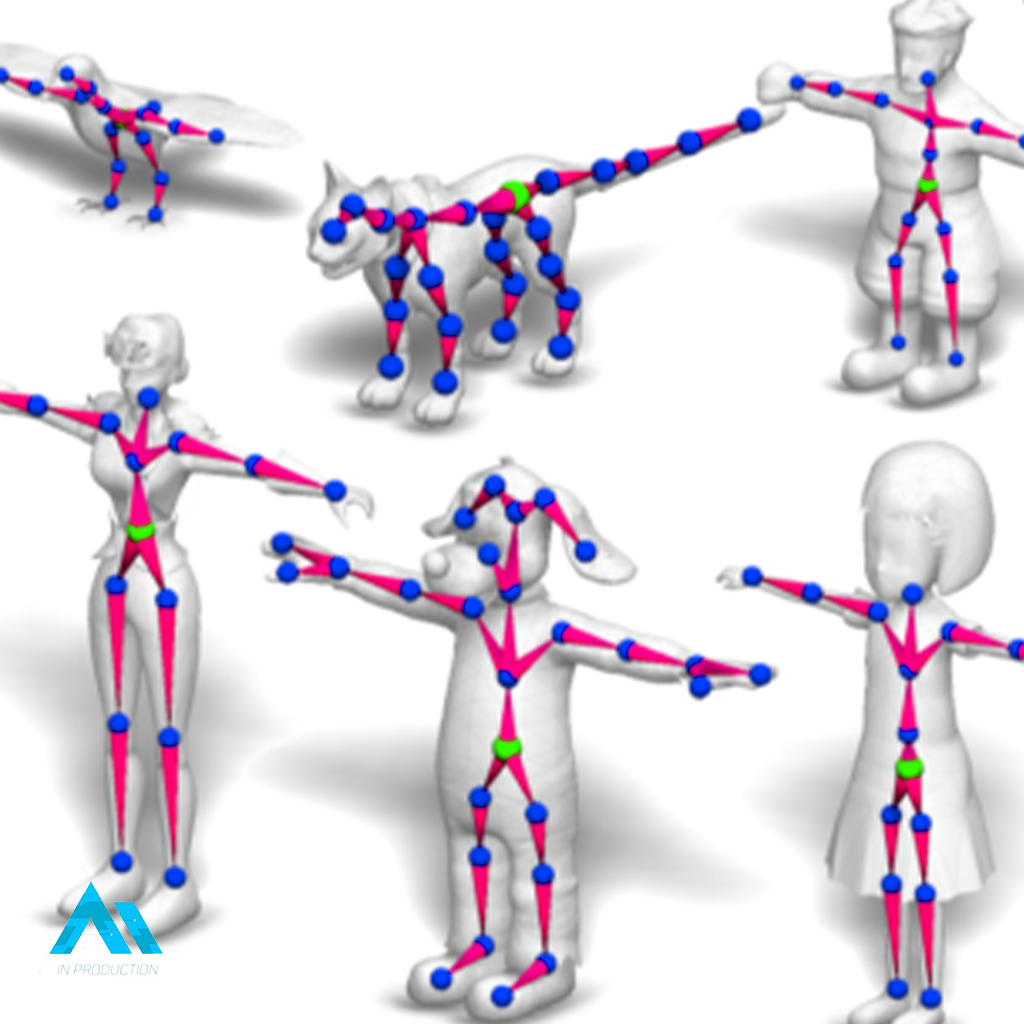

Overview: Automatic Rigging

To date, creating a rig for a model and assigning the correct blending weights have been manual tasks. The rig is required to animate your model, which makes this a crucial step in the animation pipeline. In this overview we look at whether this phase can be automated, which would be a big step forward in accelerating the animation pipeline.
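For readers unfamiliar with blending weights, the sketch below shows what they do in linear blend skinning, a common skinning technique: each vertex is moved by several bones, and the weights decide how much each bone contributes. The text above does not say which skinning model is meant, so this is an assumption. The example is simplified to 2D, each bone is reduced to a pivot and a rotation angle, and the function names are hypothetical.

```python
import math


def rotate_about(point, pivot, angle):
    """Rotate a 2D point around a pivot by angle (in radians)."""
    x, y = point[0] - pivot[0], point[1] - pivot[1]
    c, s = math.cos(angle), math.sin(angle)
    return (pivot[0] + c * x - s * y, pivot[1] + s * x + c * y)


def skin_vertex(vertex, bones, weights):
    """Linear blend skinning for one vertex.

    bones   -- list of (pivot, angle) pairs, one per bone
    weights -- one blending weight per bone; they must sum to 1
    """
    if len(bones) != len(weights):
        raise ValueError("need exactly one weight per bone")
    if abs(sum(weights) - 1.0) > 1e-6:
        raise ValueError("blending weights must sum to 1")
    x = y = 0.0
    for (pivot, angle), weight in zip(bones, weights):
        # Transform the vertex as if it were fully attached to this bone,
        # then add that position scaled by the bone's weight.
        px, py = rotate_about(vertex, pivot, angle)
        x += weight * px
        y += weight * py
    return (x, y)
```

A vertex near a joint typically gets split weights (for example 0.5 and 0.5) so that it bends smoothly, while a vertex in the middle of a limb follows a single bone with weight 1. Choosing these values for every vertex is the part that is tedious to do by hand.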