Audio in Games

Audio is essential to the success of any game. It provides an immersive experience that can make the difference between a player enjoying a game and becoming frustrated and giving up.

Delayed, missing or incorrect sound effects can seriously disrupt immersion, as can inconsistent volume levels or sudden volume changes in the background music.

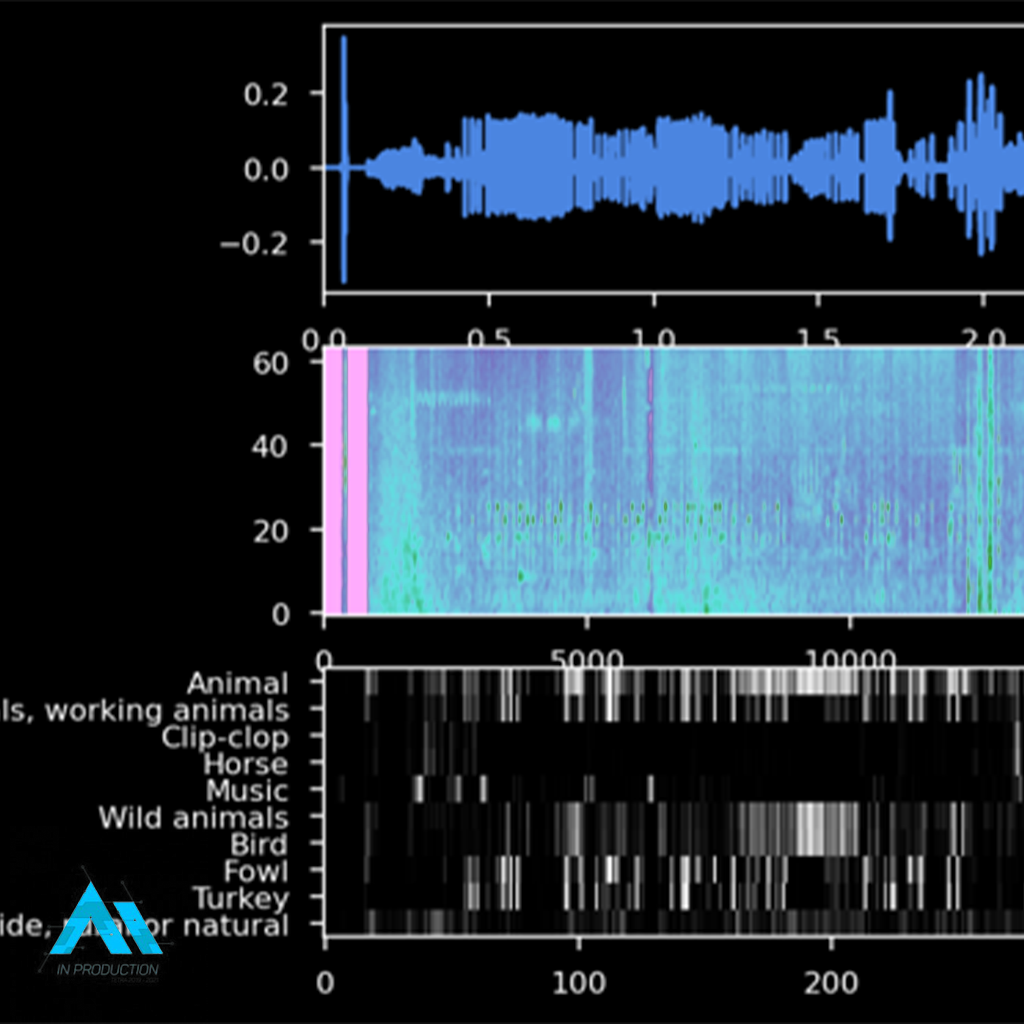

With this use case, we want to investigate what impact AI can have on audio in games, and whether it can address problems such as detecting errors in sound effects and music.

Not every player is bothered by such issues. However, the right sound effects or background music help set the atmosphere of a game. Audio is also an important cue for what is happening in the game at any given moment. This is illustrated by moments when an enemy sneaks up on you from the side: if you cannot hear it coming, it gains the advantage in the attack.

Unfortunately, audio issues still occur in games. They can be caused by several factors, including code changes, poorly optimized assets, or simply the way the game is played. We refer to these issues as audio bugs.

Actively detecting audio bugs takes considerable time. Furthermore, audio bugs are sometimes overlooked because game testers give audio a lower priority. For example, testers sometimes play without headphones or with the volume set to 0: detecting graphical and functional bugs involves a lot of repetition, which means the same sound effects are played ad nauseam.

In this use case, we explore ways to automatically detect audio bugs during development, so that they can be identified and resolved before they cause problems for players. However, we leave aside the more nuanced audio challenges, as detecting technical properties such as room temperature is too niche to explore further here.
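As a minimal illustration of automated detection, the sketch below flags two of the symptoms mentioned above: missing audio (near-silent stretches) and sudden volume changes. It is a simple signal-level heuristic, not an AI model, and could at most serve as a baseline. The function names, the window size and the -60 dBFS and 12 dB thresholds are hypothetical choices, and the sketch assumes mono samples normalized to the range [-1, 1].

```python
import math
from typing import List, Tuple


def window_rms_db(samples: List[float], window: int) -> List[float]:
    """RMS level of each complete, non-overlapping window, in dBFS."""
    levels = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        rms = math.sqrt(sum(s * s for s in chunk) / window)
        # Clamp to a tiny value so digital silence does not hit log10(0).
        levels.append(20.0 * math.log10(max(rms, 1e-10)))
    return levels


def find_audio_issues(
    samples: List[float],
    window: int = 1024,
    silence_db: float = -60.0,  # hypothetical threshold
    jump_db: float = 12.0,      # hypothetical threshold
) -> List[Tuple[int, str]]:
    """Return (window_index, issue) pairs for suspicious windows."""
    levels = window_rms_db(samples, window)
    issues = []
    for i, level in enumerate(levels):
        if level < silence_db:
            issues.append((i, "silence"))  # possibly a missing sound
        if i > 0 and abs(level - levels[i - 1]) > jump_db:
            issues.append((i, "volume_jump"))  # abrupt loudness change
    return issues
```

In practice, the samples would come from a recording of a test play session. Intentional silences and loud events such as explosions would also be flagged, so the thresholds would need tuning per game.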

Scalable bodies & clothes

Clothing for a digital character is created by simulating 2D patterns that are draped around a body. This process can take a long time: the simulation itself is slow, and the 2D patterns may have to be adjusted after each iteration to change the result of the simulation.

If the same garment needs to be draped on different characters or avatars, adjusting the patterns and simulation settings so that it fits the same way on each avatar can be a lot of work. The aim of this use case was to investigate, from the perspective of both the entertainment sector and the clothing industry, how 3D procedural techniques can automate the scaling of clothing simulations between different avatars.

Runtime procedural generation

Software exists to build procedural 3D content in a modular way. However, this 3D content is usually generated in advance and then used in a real-time environment, such as a game engine, as pre-made or pre-baked assets. With this workflow, neither the procedural structure nor the parameters of the generated elements can be adjusted while the real-time application is running. As a result, a procedurally constructed 3D model or environment cannot adapt dynamically to real-time input, such as gameplay in a game or the scanned environment in an augmented reality application.
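The difference can be sketched with a small, hypothetical example: instead of baking a fence into a fixed asset, the fence posts are regenerated from parameters whenever a runtime input changes, for instance the length of a wall detected by an AR scan. `FenceParams`, `generate_fence_posts` and `RuntimeFence` are illustrative names, not part of any engine or tool API, and Python is used only for readability.

```python
import math
from dataclasses import dataclass, replace
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class FenceParams:
    """Hypothetical parameters for a procedural fence."""
    length: float              # total length in metres, e.g. from a scanned wall
    post_spacing: float = 2.0  # maximum distance between two posts


def generate_fence_posts(params: FenceParams) -> List[Vec3]:
    """Return evenly spaced post positions along the x-axis."""
    if params.length <= 0 or params.post_spacing <= 0:
        raise ValueError("length and post_spacing must be positive")
    # Round the number of gaps up so that no gap exceeds post_spacing.
    gaps = math.ceil(params.length / params.post_spacing)
    step = params.length / gaps
    return [(i * step, 0.0, 0.0) for i in range(gaps + 1)]


class RuntimeFence:
    """Keeps the generated geometry in sync with runtime input."""

    def __init__(self, params: FenceParams) -> None:
        self.params = params
        self.posts = generate_fence_posts(params)
        self.rebuilds = 1

    def set_length(self, length: float) -> None:
        # Regenerate only when the input actually changes.
        if length != self.params.length:
            self.params = replace(self.params, length=length)
            self.posts = generate_fence_posts(self.params)
            self.rebuilds += 1
```

For example, `RuntimeFence(FenceParams(length=5.0))` starts with four posts; calling `set_length(4.0)` regenerates three posts at x = 0, 2 and 4. A pre-baked asset, by contrast, would keep its original post layout.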

Houdini propping pipeline

In game design and VFX, propping is the practice of dressing spaces and landscapes with objects. This can range from placing stones and trees in a large park to filling an attic room with cardboard boxes and scattered documents. Placing these objects is still often a manual process. The procedural propping pipeline is an example of a streamlined workflow that does not detract from the artistic process.
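A procedural propping step can be sketched as seeded scattering with a minimum spacing: the artist controls the area, the number of props and their spacing, and a fixed seed keeps the layout reproducible between iterations. This is a hypothetical rejection-sampling ("dart throwing") sketch, not the pipeline's actual Houdini implementation; `scatter_props` and its parameters are illustrative.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def scatter_props(
    width: float,
    depth: float,
    count: int,
    min_dist: float,
    seed: int = 0,
    max_attempts: int = 10_000,
) -> List[Point]:
    """Place up to `count` props in a width x depth area, at least
    `min_dist` apart, using seeded rejection sampling."""
    rng = random.Random(seed)  # fixed seed -> same layout every run
    placed: List[Point] = []
    attempts = 0
    while len(placed) < count and attempts < max_attempts:
        attempts += 1
        candidate = (rng.uniform(0.0, width), rng.uniform(0.0, depth))
        # Reject candidates that are too close to an existing prop.
        if all(math.dist(candidate, p) >= min_dist for p in placed):
            placed.append(candidate)
    return placed
```

Because placement is rejection-based, a crowded area may yield fewer props than requested, so the caller should check the length of the result.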

Procedural generation in Virtual Reality

Runtime procedural 3D is still mainly used for a few familiar purposes: world generation, player creation and world destruction. The goal of this project was to explore runtime procedural 3D in which the artist modifies the world using VR controllers, giving artists more tools to build the world than just the editor and the inspector.

Azure Kinect body tracking character creator

The visitor stands in front of a screen that shows two portraits of lords of the castle. The visitor selects one of them by physically moving their hands and clicking on the portrait virtually. Once a lord is chosen, the visitor can dress up a character with items of clothing belonging to the lords of the castle. Each choice is accompanied by audio commentary, so that the visitor gets to know the characters better.

Virtual Reality escape room

For some prototypes, specific attention was paid to historical stories and events. How can immersive technology help tell these stories interactively? Can we make visitors feel part of them, and let them discover the story themselves through interactions rather than presenting it to them ready-made?

These questions, combined with some of the ideas raised during the brainstorming sessions, led to the development of two prototypes centered on role-playing, group experience, interactivity, gameplay and storytelling.