To demonstrate what’s possible with Augmented Reality (AR) today, we set out to build an immersive musical experience. Our inspiration came from Gorillaz’s “Skinny Ape” AR performance, which debuted alongside their single of the same name. That performance showed how game technology can be used to create new and engaging experiences for fans.

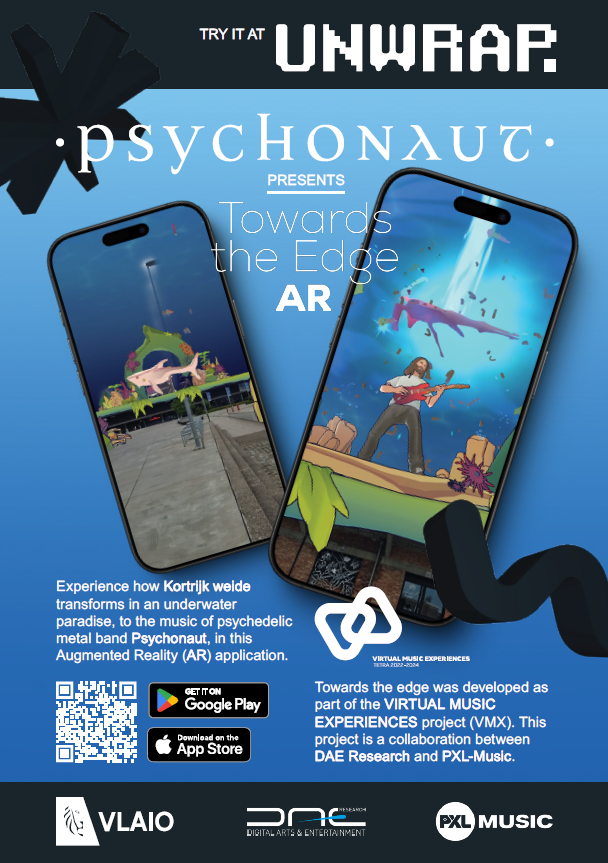

For our own experiment, we were fortunate to collaborate with Psychonaut, a Belgian psychedelic post-metal band, who kindly allowed us to use their music as the foundation of this AR experience.

A Group Project with Real-World Impact

The project began as a group assignment commissioned by DAE Research. In these group projects, teams of Digital Arts & Entertainment students spend an entire semester on a game or interactive assignment. They go through the full pipeline: meeting with clients (in this case, DAE Research and the band Psychonaut), developing a prototype, and finally polishing the complete experience.

Because students can dedicate four days of every five-day week to the project, the scope can be deeper and more ambitious. That said, the first version of the experience leaned too much toward being a game and too little toward being a musical experience. It lacked sufficient integration between the 3D elements and the physical environment, and it didn’t fully demonstrate the capabilities of modern AR.

Augmented Reality: A State-of-the-Art Overview

But what exactly can AR do today?

Most people are familiar with Pokémon Go, where 3D creatures appear in your environment via your smartphone. There, 3D assets are overlaid on the camera feed and only roughly anchored to your surroundings using GPS and simple motion tracking.

With QR codes, it has long been possible to track a phone’s position relative to the code and place 3D content accordingly.

Visual Positioning System (VPS)

More recent advances allow for camera-based localization using recognizable features in your environment, a technique known as VPS (Visual Positioning System). VPS combines your phone’s GPS, camera, gyroscope, and other sensors to determine your precise position and orientation by comparing live camera imagery to a pre-existing database of environmental scans.

This level of accuracy, often down to just a few centimeters, is crucial for realistically integrating 3D elements into physical spaces. In our Psychonaut AR experience, we used VPS to anchor 3D visuals to real-world buildings around Kortrijk Weide, where the experience takes place.

Scene Semantics

Another common AR technology is scene semantics, which analyzes your camera feed and assigns a label, such as sky, building, person, or tree, to each pixel. This allows for highly specific effects, like displaying 3D content only on certain types of surfaces or applying custom visual styles to specific parts of the scene. In our project, we used this technique to apply a surreal underwater effect to the sky.
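To make the per-pixel idea concrete, here is a minimal Python sketch of how a semantic label map could restrict an effect to the sky. It assumes the segmentation output is a 2D grid of string labels matching the camera frame, and it stands in for the "underwater" look with a simple blend toward a teal tint. The function names, label strings, and tint values are hypothetical illustrations, not Lightship's API or the shader we actually used in Unity.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]

# Hypothetical underwater tint and blend strength (illustrative values only).
UNDERWATER_TINT: RGB = (0, 90, 120)
BLEND = 0.6


def blend(pixel: RGB, tint: RGB, amount: float) -> RGB:
    """Linearly interpolate each channel of `pixel` toward `tint`."""
    return tuple(round(p + (t - p) * amount) for p, t in zip(pixel, tint))


def tint_sky(frame: List[List[RGB]], labels: List[List[str]]) -> List[List[RGB]]:
    """Return a copy of `frame` in which only pixels labeled 'sky' are tinted.

    `labels` is assumed to have the same dimensions as `frame`, holding one
    semantic label per pixel (e.g. 'sky', 'building', 'person', 'tree').
    """
    return [
        [blend(px, UNDERWATER_TINT, BLEND) if lab == "sky" else px
         for px, lab in zip(row_px, row_lab)]
        for row_px, row_lab in zip(frame, labels)
    ]
```

For a one-row frame `[[(200, 220, 255), (120, 110, 100)]]` labeled `[["sky", "building"]]`, only the first pixel changes, becoming `(80, 142, 174)`. On a phone, the same masking would run on the GPU in a shader rather than in a Python loop.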

Depth Estimation

Depth estimation determines how far the surfaces in the camera view are from the camera. This lets 3D content appear in front of or behind real-world objects (occlusion), which adds considerably to the realism. While this works well for nearby elements, it struggles with distant objects such as large buildings, because depth estimates become less reliable the farther away a surface is.

To solve this for our location, we created invisible 3D versions of the Kortrijk Weide buildings. These “ghost” models occlude the 3D content, making it appear as though, for example, fish swim behind the buildings.
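The combination of estimated depth and invisible "ghost" buildings amounts to a per-pixel depth test. The Python sketch below shows that logic for a single pixel, assuming the real-world depth is the nearer of the two sources and that on-device estimates are discarded beyond a cutoff. The cutoff value and all function names are hypothetical; in an engine such as Unity this test would normally be handled by the depth buffer rather than by hand-written code.

```python
import math
from typing import Optional

# Hypothetical cutoff (in meters) beyond which the on-device depth estimate
# is treated as unreliable. Illustrative only, not a measured device limit.
MAX_RELIABLE_DEPTH_M = 20.0


def real_world_depth(estimated_m: Optional[float], ghost_m: Optional[float]) -> float:
    """Combine the two sources of real-world depth for one pixel (meters).

    - `estimated_m`: depth from on-device depth estimation, or None if missing.
    - `ghost_m`: depth of the invisible ghost building model at this pixel,
      or None if no ghost model covers the pixel.
    Estimates beyond MAX_RELIABLE_DEPTH_M are ignored; the nearest surface wins.
    With no usable source, the pixel is treated as open sky (infinite depth).
    """
    candidates = [math.inf]
    if estimated_m is not None and estimated_m <= MAX_RELIABLE_DEPTH_M:
        candidates.append(estimated_m)
    if ghost_m is not None:
        candidates.append(ghost_m)
    return min(candidates)


def is_virtual_visible(virtual_m: float,
                       estimated_m: Optional[float],
                       ghost_m: Optional[float]) -> bool:
    """A virtual fragment is drawn only if it is closer than the real surface."""
    return virtual_m < real_world_depth(estimated_m, ghost_m)
```

A fish 60 m away behind a ghost building at 45 m is hidden even though the unreliable 70 m estimate would have let it through, while a fish at 30 m stays visible in front of the same building.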

Light Estimation

Finally, light estimation analyzes the real-world lighting conditions so that the 3D elements can be lit and shadowed to match their surroundings, further enhancing immersion.

Together, these technologies blend digital content seamlessly into our surroundings, creating more believable and immersive AR experiences.

Implementing the Location

When it came to enabling VPS, we had a few options. One was Google’s ARCore, whose VPS relies on Google’s vast Street View image library to build its environmental models. Unfortunately, at the time of development, Kortrijk was not covered by ARCore’s VPS.

Instead, we opted for Niantic’s Lightship plugin, which lets developers use not only existing public scans but also their own uploaded private scans. Fortunately, a public scan of Kortrijk Weide was already available, which streamlined the process.

There is one major difference: ARCore allows you to import a 3D map of the environment directly into your game engine to position objects accurately, whereas Niantic Lightship lacks this functionality. To compensate, we used satellite imagery from Google Maps as a reference and aligned our elements manually, through trial and error, until their scale and placement were correct.
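Aligning content against satellite imagery comes down to measuring where things are on the map and turning that into offsets in the scene. The sketch below shows one common way to do that conversion, an equirectangular approximation of latitude/longitude differences, which could provide a first estimate before fine-tuning by eye. The function name, the idea of measuring everything from a single anchor point, and the east/north output convention are assumptions for illustration; they are not a Lightship feature or a description of our exact workflow.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def latlon_to_local_m(anchor: Tuple[float, float],
                      point: Tuple[float, float]) -> Tuple[float, float]:
    """Approximate (east, north) offset in meters of `point` relative to `anchor`.

    Both arguments are (latitude, longitude) in degrees, e.g. read off a
    satellite map. The equirectangular approximation used here is generally
    accurate enough over the few hundred meters spanned by a single site.
    """
    lat0, lon0 = map(math.radians, anchor)
    lat1, lon1 = map(math.radians, point)
    mean_lat = (lat0 + lat1) / 2
    # Longitude lines converge toward the poles, so scale by cos(latitude).
    east = (lon1 - lon0) * math.cos(mean_lat) * EARTH_RADIUS_M
    north = (lat1 - lat0) * EARTH_RADIUS_M
    return east, north
```

For example, a point 0.001° of latitude north of the anchor comes out roughly 111 m north of it. How those offsets map onto the engine's axes depends on how the VPS anchor is oriented, which is why some manual adjustment would still be needed.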

Art Direction

In collaboration with the band, the students chose an underwater world with a stylized, cel-shaded look: bold outlines, strong shadows, and vibrant colors. To enhance the sense of immersion and tie the 3D elements to the real world, many of the models were placed on or against buildings.
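The "strong shadows" part of a cel-shaded look usually comes from quantizing the smooth diffuse lighting term into a few flat bands. The Python sketch below illustrates that banding for a single surface point; it does not cover outlines, and the function names, band count, and shadow floor are hypothetical choices rather than the parameters of our actual Unity shaders.

```python
import math
from typing import Sequence, Tuple


def normalize(v: Sequence[float]) -> Tuple[float, ...]:
    """Scale a vector to unit length (assumes a non-zero vector)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def toon_shade(normal: Sequence[float], light_dir: Sequence[float],
               bands: int = 3, shadow_floor: float = 0.2) -> float:
    """Return a quantized light intensity in [shadow_floor, 1] for one point.

    `light_dir` points from the surface toward the light. The smooth Lambert
    term max(0, N·L) is snapped down to one of `bands` discrete levels, which
    produces the flat color regions and hard shadow edges of cel-shading.
    """
    n = normalize(normal)
    l = normalize(light_dir)
    lambert = max(0.0, sum(a * b for a, b in zip(n, l)))
    # Snap to the band below; a fully lit point (lambert == 1) stays in the top band.
    level = min(int(lambert * bands), bands - 1) / (bands - 1)
    return shadow_floor + (1.0 - shadow_floor) * level
```

With three bands, a surface facing the light gets 1.0, one lit at 60° falls into the middle band (about 0.6), and one facing away gets the 0.2 floor. In an AR setting, the light direction and intensity fed into such a shader could come from light estimation.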

The underwater theme was reinforced by replacing the sky and adding particle effects such as air bubbles, glowing jellyfish, and schools of fish.

For the avatars of the band members, we used Ready Player Me, a free tool that generates stylized, pre-rigged 3D characters compatible with multiple game engines. After importing the avatars into Unity, we swapped their default shaders for our custom cel-shaders to match the look of the environment.

For animation, we used free assets from Adobe Mixamo, which made it easy to bring the avatars to life.

A Music Video Reimagined in AR

Combining these artistic and technical elements with a simple storyboard, which triggers different animations and effects depending on the intensity of the music, produced an immersive AR music video: a unique experience in which the real world transforms and the band and their music take center stage, redefined through the lens of augmented reality.
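The article doesn't specify how musical intensity was determined; the cues may well have been authored by hand on a timeline. As one possible automated approach, the Python sketch below measures loudness as the root-mean-square (RMS) amplitude of fixed-size windows of audio samples and maps each window to a storyboard cue through thresholds. The cue names, threshold values, and function names are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical storyboard: (minimum RMS level, cue name), sorted by threshold.
STORYBOARD: List[Tuple[float, str]] = [
    (0.0, "calm_idle"),       # quiet passages, e.g. slowly drifting fish
    (0.2, "band_performs"),   # moderate intensity
    (0.5, "full_spectacle"),  # loudest sections, all effects on
]


def rms(window: Sequence[float]) -> float:
    """Root-mean-square amplitude of a window of samples in [-1, 1]."""
    if not window:
        return 0.0
    return math.sqrt(sum(s * s for s in window) / len(window))


def cue_for(level: float) -> str:
    """Pick the cue with the highest threshold that `level` reaches."""
    chosen = STORYBOARD[0][1]
    for threshold, cue in STORYBOARD:
        if level >= threshold:
            chosen = cue
    return chosen


def cues_over_time(samples: Sequence[float], window_size: int) -> List[str]:
    """Split the track into fixed-size windows and map each one to a cue."""
    return [
        cue_for(rms(samples[i:i + window_size]))
        for i in range(0, len(samples), window_size)
    ]
```

A track that starts quietly and then builds up would yield a sequence such as `calm_idle`, `band_performs`, `full_spectacle`. A production version would likely smooth the RMS values or add hysteresis so that cues don't flicker at threshold boundaries.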