As part of our ongoing exploration of how XR technologies can enrich musical expression, we set out to build a complete digital twin experience, covering not just a musician but also the venues they perform in. The project investigates how accessible and efficient it can be to create virtual performances that preserve both the performer’s likeness and the acoustic identity of real-world spaces.

To do this, we brought together multiple technologies: photogrammetry, MetaHumans, acoustic analysis, motion capture, and virtual reality. The result is a fully immersive VR experience in which a pianist performs in highly detailed 3D-scanned venues, with acoustics that respond to the chosen venue and an accurately recreated virtual instrument.

Step 1: Scanning the Musician – From Reality to MetaHuman

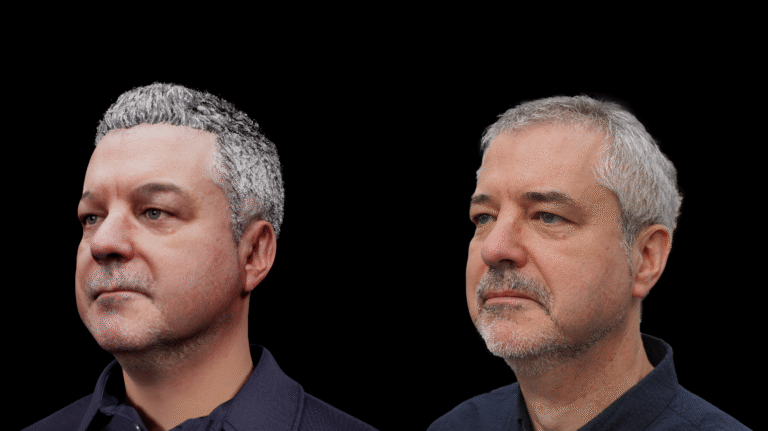

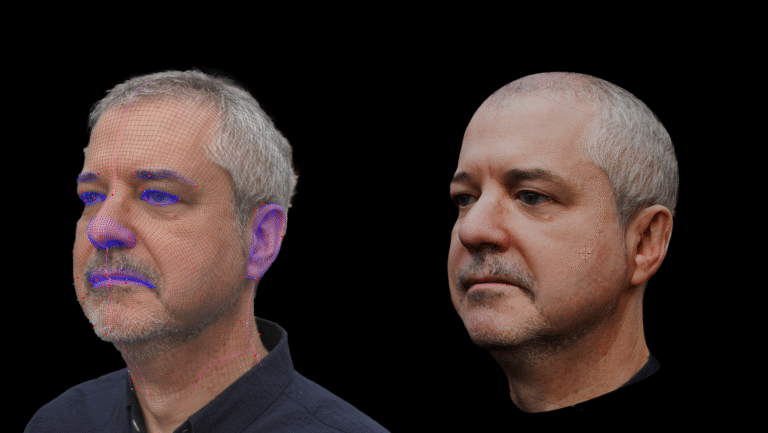

We began by creating a digital twin of our test subject, Orpheus researcher and pianist Tom Beghin. Our goal was to build a MetaHuman replica that was not only realistic but also ready for animation and live performance simulations.

Using photogrammetry, we captured a detailed 3D model of Tom’s head from over 100 photographs taken simultaneously with our high-end scan rig at Campus The Square (Kortrijk). The photographs were processed in RealityCapture, and the resulting head mesh was imported into Epic Games’ Mesh to MetaHuman tool to create a lifelike facial structure.

To improve visual fidelity, we used FaceBuilder by KeenTools in Blender to fine-tune the geometry and generate a custom facial texture. This approach captured unique features like skin tone, wrinkles, and blemishes, significantly enhancing the MetaHuman’s resemblance to Tom.

Step 2: Scanning the Venues – Digital Stages for Virtual Performance

To complete the immersive setup, we needed digital replicas of performance spaces. For this, we collaborated with RealVisuals, who scanned two venues, each with a capture technique suited to the space:

De Kreun (Kortrijk): Captured using a portable scanner combining photogrammetry and infrared for high-detail indoor scans.

d’Hane Steenhuyse: A historically protected room whose floor may not be walked on. This space was therefore scanned using drone-based photogrammetry, which offered a unique opportunity to stage a virtual performance in a location where no physical concert would be allowed.

These environments were reconstructed as fully navigable 3D spaces within Unreal Engine 5.

Step 3: Capturing Acoustics – Virtual Sound in Real Spaces

Our colleagues at PXL Music Research added an essential layer to the project: acoustic analysis. They performed audio scans of both venues, capturing the reverberation and spatial sound profiles unique to each room.
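The analysis method itself is not detailed here. As an illustrative sketch only, one standard way to summarize a room's reverberation is to take a measured impulse response, compute its Schroeder backward-integrated decay curve, and extrapolate the slope to a 60 dB drop (RT60). The sketch assumes the audio scans yield an impulse response as a plain list of samples; the function names and the −5 to −25 dB fit range are our own choices, not PXL's method.

```python
import math


def schroeder_decay_db(ir):
    """Schroeder energy decay curve in dB, relative to the total energy of the IR."""
    remaining = []
    acc = 0.0
    # Accumulate squared samples from the end of the response backwards.
    for x in reversed(ir):
        acc += x * x
        remaining.append(acc)
    remaining.reverse()
    total = remaining[0]
    return [10.0 * math.log10(r / total) if r > 0 else float("-inf") for r in remaining]


def estimate_rt60(ir, sample_rate, upper_db=-5.0, lower_db=-25.0):
    """Fit a line to the decay curve between upper_db and lower_db (a T20-style fit)
    and extrapolate it to a 60 dB drop. Returns seconds."""
    decay = schroeder_decay_db(ir)
    points = [(n / sample_rate, d) for n, d in enumerate(decay) if lower_db <= d <= upper_db]
    if len(points) < 2:
        raise ValueError("impulse response too short for the chosen fit range")
    # Ordinary least-squares slope of the decay, in dB per second.
    mean_t = sum(t for t, _ in points) / len(points)
    mean_d = sum(d for _, d in points) / len(points)
    num = sum((t - mean_t) * (d - mean_d) for t, d in points)
    den = sum((t - mean_t) ** 2 for t, _ in points)
    return -60.0 / (num / den)
```

Applied to a synthetic exponential decay built to have an RT60 of 0.5 s, `estimate_rt60` returns a value very close to 0.5. Real measurements are usually analysed per octave band; that step is omitted here for brevity.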

This data was integrated into the virtual performance using Ableton Live, extended with Max for Live audio tools. During the VR experience, the viewer can switch between the two venues using a controller. When the venue changes, the acoustic response changes in real time, giving users a tangible sense of how a space shapes its sound.
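How the Ableton Live and Max for Live setup performs the switch is not described. One common approach, sketched here purely as an assumption, is to convolve the dry piano signal with each venue's impulse response and, when the listener changes venue, crossfade between the two wet signals with equal-power gains so the transition does not click. The names `convolve`, `equal_power_crossfade`, `switch_at`, and `fade_len` are hypothetical.

```python
import math


def convolve(dry, ir):
    """Direct-form convolution: out[n] is the sum of dry[k] * ir[n - k]."""
    out = [0.0] * (len(dry) + len(ir) - 1)
    for k, x in enumerate(dry):
        if x == 0.0:
            continue  # silent input samples contribute nothing
        for j, h in enumerate(ir):
            out[k + j] += x * h
    return out


def equal_power_crossfade(a, b, switch_at, fade_len):
    """Play signal a, then fade to signal b over fade_len samples from switch_at.

    The gains follow cos/sin curves, so gain_a**2 + gain_b**2 == 1 at every sample.
    """
    n = min(len(a), len(b))
    out = []
    for i in range(n):
        # Fade position goes from 0 to 1 across the fade window, clamped outside it.
        p = min(max((i - switch_at) / fade_len, 0.0), 1.0)
        g_a = math.cos(p * math.pi / 2)
        g_b = math.sin(p * math.pi / 2)
        out.append(g_a * a[i] + g_b * b[i])
    return out


dry = [1.0, 0.0, 0.0, 0.0]
wet_venue_a = convolve(dry, [1.0, 0.5])       # hypothetical short IR for venue A
wet_venue_b = convolve(dry, [1.0, 0.0, 0.25])  # hypothetical short IR for venue B
mixed = equal_power_crossfade(wet_venue_a, wet_venue_b, switch_at=1, fade_len=2)
```

Direct convolution costs O(N·M) operations and is shown only for clarity; real-time convolution reverbs typically use partitioned FFT convolution instead.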

Step 4: A Virtual Concert – Pianist, Piano, and Performance

The piece was performed on a Stein piano, which we recreated digitally from CAD files for maximum visual and mechanical accuracy. To animate the keys, Tom recorded his performance on a MIDI keyboard, which allowed us to map the note data to the virtual keys in UE5.
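The exact route from MIDI file to UE5 animation is not described. As a minimal sketch of the underlying idea, the recorded note stream can be reduced to per-key press intervals, each of which could drive one virtual key's down/up animation. The sketch assumes the MIDI events have already been parsed into `(time_s, kind, note, velocity)` tuples with `kind` set to `"on"` or `"off"`; the `KeyPress` type and the key-range values in the usage line are hypothetical placeholders, not the actual compass of the Stein piano. It follows the MIDI convention that a note-on with velocity 0 acts as a note-off, and it ignores the sustain pedal.

```python
from dataclasses import dataclass


@dataclass
class KeyPress:
    key_index: int  # 0 = lowest key of the virtual keyboard
    start: float    # seconds
    end: float      # seconds
    velocity: int   # 1-127; could scale how fast the key is animated


def midi_to_key_presses(events, lowest_note, highest_note):
    """Pair note-on/note-off events (sorted by time) into KeyPress intervals.

    Notes outside [lowest_note, highest_note] are ignored; notes still held
    at the end of the stream are not emitted.
    """
    open_notes = {}  # MIDI note number -> (start time, velocity)
    presses = []
    for time_s, kind, note, velocity in events:
        if not lowest_note <= note <= highest_note:
            continue
        # MIDI convention: a note-on with velocity 0 means note-off.
        is_off = kind == "off" or (kind == "on" and velocity == 0)
        if not is_off:
            if note in open_notes:
                # Re-struck without a release: close the earlier press first.
                start, vel = open_notes.pop(note)
                presses.append(KeyPress(note - lowest_note, start, time_s, vel))
            open_notes[note] = (time_s, velocity)
        elif note in open_notes:
            start, vel = open_notes.pop(note)
            presses.append(KeyPress(note - lowest_note, start, time_s, vel))
    return sorted(presses, key=lambda p: (p.start, p.key_index))


events = [(0.0, "on", 60, 80), (0.5, "on", 64, 70), (1.0, "on", 60, 0), (1.2, "off", 64, 0)]
presses = midi_to_key_presses(events, lowest_note=29, highest_note=89)  # hypothetical range
```

Keeping the result as explicit intervals rather than raw events makes it straightforward to sample "is this key down?" at any animation frame.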

Additionally, we used motion capture gloves to track Tom’s hand and finger movements while playing. This mocap data was applied to his MetaHuman avatar, enabling us to reproduce the expressive subtleties of his performance in virtual form.

The Final Experience: Fully Immersive and Artistically Accurate

All of these elements came together in a VR experience built in Unreal Engine 5, where users can:

Watch the digitally scanned pianist perform in either venue

Experience how different acoustic environments shape the music

See a fully animated Stein piano respond in sync with the live-recorded performance

Switch spaces on the fly, transforming both the visuals and the sound in real time

By combining the latest in 3D scanning, animation, acoustic modeling, and real-time rendering, this use case shows how far we can go in recreating not just how a performance looks, but also how it feels and sounds.

Looking Forward

This project opens exciting avenues for virtual concerts, remote rehearsals, and interactive music education. In future iterations, we aim to further refine body modeling, enable more detailed environmental interactivity, and explore how these techniques can be applied to larger ensembles or non-traditional venues.