Generative AI: The struggle for control

Every year thousands of researchers and industry professionals unite at the SIGGRAPH conference to share state of the art research, best practices, and innovative new workflows with their peers. The conference is primarily a computer graphics conference. It’s unique in that it unites both the fields of film visual effects and real-time graphics, or more specifically video game technology. These days, however, next to a computer graphics conference, SIGGRAPH is also one of the worlds’ biggest AI conferences. This year, a total of 844 papers were sent in, with 252 being accepted, and 134 being presented at the conference across a grand total of 50 different sessions each focussing on a different type of use case.

For years now, SIGGRAPH has been an essential way for us to keep a keen eye on trends and evolutions in the field of applied AI. Keeping up with this rapidly evolving field is always a losing battle – the rate of technological evolution is much faster than any individual person could possibly keep up with. I’ve often compared it to chasing down a train – always arriving one station late. Luckily for anyone in the industry, actual adoption of these technologies tends to be a lot slower. Actually getting the time to learn to work with a new technology, and to understand its benefits and drawbacks, is a slow process. This makes our job as an applied researcher two-fold. On the one hand, we need to keep an eye on what’s possible technologically, on the other, we must keep an eye on the industry itself, and how adoption of these technologies is progressing.

After AI entered the mainstream some years ago thanks to Large Language Models like ChatGPT and image diffusion models like Midjourney and Stable Diffusion, adoption of AI into actual production workflows hasn’t gone as quickly and as wide-spread as many might have expected. There are several reasons for this.

Datasets: The Legal and Ethical Gray Zone

For one, it remains relatively unknown which datasets were used to train many of these models, and how they were acquired remains equally dubious. This results in a legal and ethical gray zone which logically results in hesitancy and caution. This will continue to be an ongoing issue. The AI Act, EU-wide legislation that was approved this year, seemed the most likely route to help resolve this issue, as during the writing process, it was coined that model providers would be required to describe which datasets they used, and how they were acquired. This part of the legislation however never saw the light of day, heavily rumoured to be thanks to lobbying from French and German AI companies who worried this might impact their competitiveness in respect to their American competitors. This, in and of itself, of course, tells you more than enough about the true nature of these datasets. Nevertheless, with the status quo as it is now, with these models being around for some time now, it’s become clear that this is mostly a risk to model providers, the OpenAI and Stability AI’s of the world, and not to the companies who use their models in their workflows.

Retaining Control

Aside from the legal and ethical questions that continue to exist around AI models, what else is slowing down adoption? In the five years I’ve worked as an applied AI researcher, there’s one question I have heard consistently again and again: “How do I get it to do what I need it to do? How do we control it?“. Generating images with text-to-image models is cool, but how do I get it to generate what’s in my mind? Generating text with large language models has a lot of potential, but how do I get it to generate the type of text I need, given the requirements and parameters of my use case? This is, in my opinion, the biggest ongoing challenge with AI. You can attribute this issue to two core problems.

On the one hand, a lot of this innovation happens on university-level academic research. Their prime focus is to innovate, and to further technology, not to create technology that can be readily applied in an industry context. This results in applied researchers, either as part of a company’s R&D department, or an applied research group such as my own, trying to use this technology for something that it was, in truth, not really designed for. This disconnect between academic research and the industry is a larger topic, but I’m glad to see that in recent years, at least in the field of AI, there has increasingly been a discourse between the two, with companies like Google, Microsoft, NVIDIA and OpenAI being heavily involved in both applying these technologies, as well as funding the underlying research.

This has resulted in an enormous stream of research papers focussed solely on solving this core issue of control.

State of the Art Examples

To more closely investigate this trend, let’s take a look at some recent examples presented at the SIGGRAPH 2024 conference in August.

Text to Image

Previous work

Gaining more detailed control over text to image models is an ongoing issue. Early works like Dreambooth and Textual Inversion gave us the ability to add new concepts to text to image models, allowing users to train the model on a small datset of examples of a specific person, animal, object, or even artstyle. Once trained, this new model would then allow us to generate images of this new subject or using this new artstyle. LORA’s were a next iteration that allowed the user create a smaller model that essentially functions as a plugin that could be used with different Stable Diffusion models. (for more, see this article)

SIGGRAPH 2024

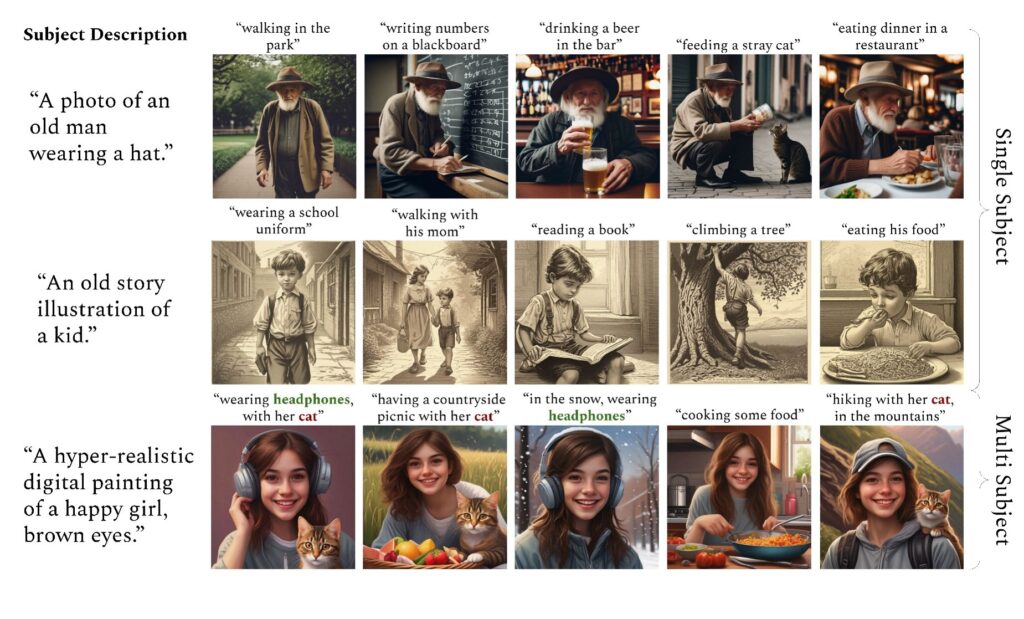

More recently, at SIGGRAPH 2024, researchers presented some new ways for creating sets of images with consistent characters between the different generated images. Some examples include:

- ConsiStory: Training-Free Consistent Text-to-Image Generation

- The chosen one: Consistent characters in text-to-image diffusion models

Previous work

Another big innovation in recent years was ControlNet. This system allows the user to provide an image, to extract some kind of data from the image, and to use this as part of the image synthesis process. This includes re-using the depth or edges from an existing image, or even extracting the pose of a character.

SIGGRAPH 2024

This paper attempts to give us the ability to adjust the lighting conditions of any image such that we can change the light sources as well as the material properties of the objects

This paper even goes one step further, and, much like ControlNet, allows the user to disect an image into different layers, including an albedo texture layer, a normal layer, and a shading layer, very similar to the way materials work in games. This allows for relighting or retexturing of existing images, or using specific layers of a pre-existing image in the synthesis of a new image.

Text to 3D

This trend of adding more ways of controlling the results of generative AI is of course not limited to 2d image generation.

This paper takes inspiration from 2d image generation LORA’s, and allows the user to select a number of 3D models as inspiration, based on which the model will then be able to generate variations in a similar style as if to extend the set of examples.

Gaussian Splats

After NERF’s, Gaussian Splats have shown to be a really efficient and visually impresssive way of creating a 3D representation of an environment. One of the main difficulties with a Gaussian Splats is how it’s not structured in a way that allows for easy editing. Papers like this one try to tackle this by introducing easy to use ways to add, remove, or modify elements of a Gaussian Splat – in this case by using text prompts and image examples.

Text to Animation

Recent papers have shown the possiblity of generating character animations from text descriptions. While this seems like it could prove useful, previous models still require a lot of trial and error to get the exact animation the user had in mind. Papers like iterative motion editing try to resolve this issue by allowing the user to use natural language to describe the modifications they would like to make, allowing for an iterative workflow instead of trial and error.

Text to Video

With video generation, so far, it’s been nearly impossible to get fine-grained control over the camera motion in the video. This new paper allows the user to control the exact movements of the camera, as well as zoom, and the location of specific subjects within the field of view of the camera. This allows for much more targeted video generation requiring much less trial and error.

Final Thoughts

Looking at all these examples, it’s clear researchers are increasingly adding control into these existing models. They are succeeding, in a lot of ways, yet to me the question remains: will this be sufficient? Will this truly resolve the underlying issue? If you have an idea in your mind and want to make that a reality with text-to-image; will this really allow you to do that? In my opinion, a lot of hurdles still remain in place. And even a few years down the line from now, adding even more ways of controlling these models, will that finally resolve this issue? For each new means of control we add to these models, we increase the complexity of working with them.

The vision we are sold with each launch of an AI models is: “look how simple it is!“. Just write some text, click generate, and just like that, you have an image! or a video! But really – using these models isn’t that easy. At first, it requires a lot of trial and error, which takes time to do. And even then, the results might not be exactly what you’re looking for. In some cases, this can really make you wonder whether this is a better way of working at all. Once we add these means of control onto the model, we decrease the simplicity of using it. We are looking for a sweet spot where we have enough control over a model so that we can make it do exactly what we want, yet not so much means of control that it becomes cumbersome to use, and we might as well have done it the traditional way.

Whenever someone working in a creative industry asks me if they should worry about their job because of AI (a question I get on a very regular basis), I think of this struggle for control. We can generate videos with AI, yes, but what kind of videos? Creating exactly what we need through AI still requires someone with knowledge and experience to exert control over the AI model. In almost all use cases, I see AI models as a supplementary technology. As another tool in the toolbox. Not there to replace entire workflows, but to assists with cumbersome tasks where and when the technology is a good fit for the problem we want to solve. Both understanding where these technologies should and should not be used, as well as actually using them properly, will continue to require a human touch.

Extra: SIGGRAPH Trends and Takeaways

With that heavy topic out of the way, allow me to also include some of my other takeaways from the SIGGRAPH conference this year.

- Generative AI continues to push the boundaries of what’s possible, getting closer to tools that are industry-ready

- Images, materials, 3D, characters, radiance fields, animation, video

- NERF’s are not dead (yet); performance improvements compete with Splats

- Context-aware materials and textures and optimized 3D meshes are now becoming a reality

- AI tool adoption for tools like Cascadeur, Wonder Dynamics, Move AI, Substance Sampler is really picking up in the industry leading to higher quality results on lower budgets

- Diffusion, CLIP, LORA and Controlnet are enabling new AI papers to do previously impossible things in non-image synthesis fields, like animation and 3D

- More and more PC’s have Neural Processing Units (NPU), allowing for AI inference to be run on there instead of on GPU/CPU

- This makes integration of AI models into real-time games much more feasible; think optimizing physics simulations, generating real-time deepfakes, …

- For VFX, Virtual Production has vanished from SIGGRAPH

- Nothing new to be said, no longer news, challenge is adoption, not technological

- Virtual Humans are everywhere

- NVIDIA in particular pushing accessibility, promising advancements in realism from Soul Machines through architectures combining AI models

- The OpenUSD alliance is pushing for OpenUSD to become a real standard, with improvements and more and more adoption in the most widely used software suites. This is the most direct and useful result of the “Metaverse” hype.